Technology moves fast, but some basic rules never change. Whether you’re using an ancient abacus or the latest smartphone, certain truths about how computers and technology work remain the same.

Understanding these unchanging principles helps us make better decisions about the tech we use every day.

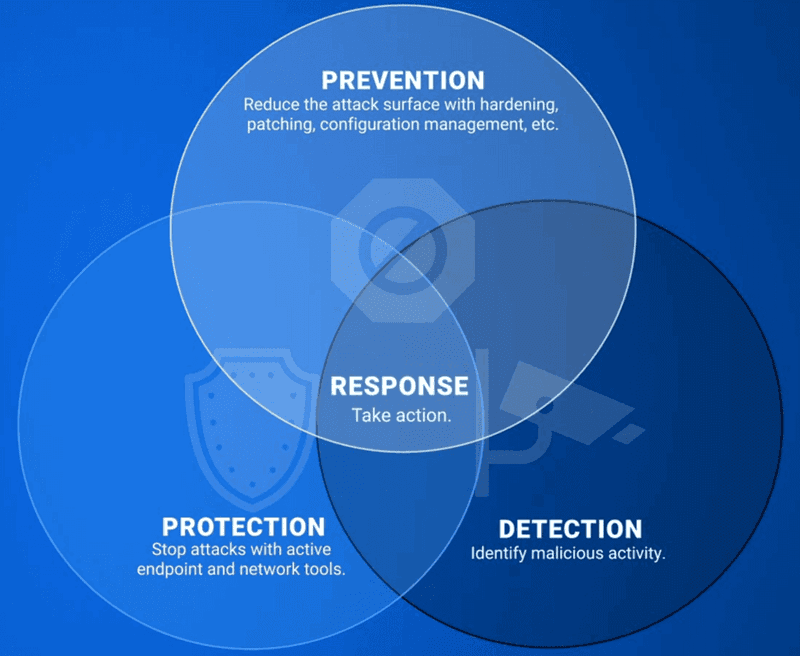

1. Perfect Security Doesn’t Exist

Every computer system has weaknesses, no matter how well-protected it seems. Hackers constantly find new ways to break into systems that experts thought were safe.

Smart users focus on making attacks harder rather than impossible. They use strong passwords, update software regularly, and stay alert for suspicious activity.

Security works best when multiple layers protect your data.

2. Powered-Off Computers Aren’t Completely Safe

Turning off your computer doesn’t guarantee complete safety from all threats. Physical access to hardware can reveal stored information even when machines aren’t running.

Skilled attackers can extract data from memory chips or hard drives using special tools. Some malware even hides in computer firmware, where it survives shutdowns and operating system reinstalls.

True security requires both digital protection and physical safeguards.

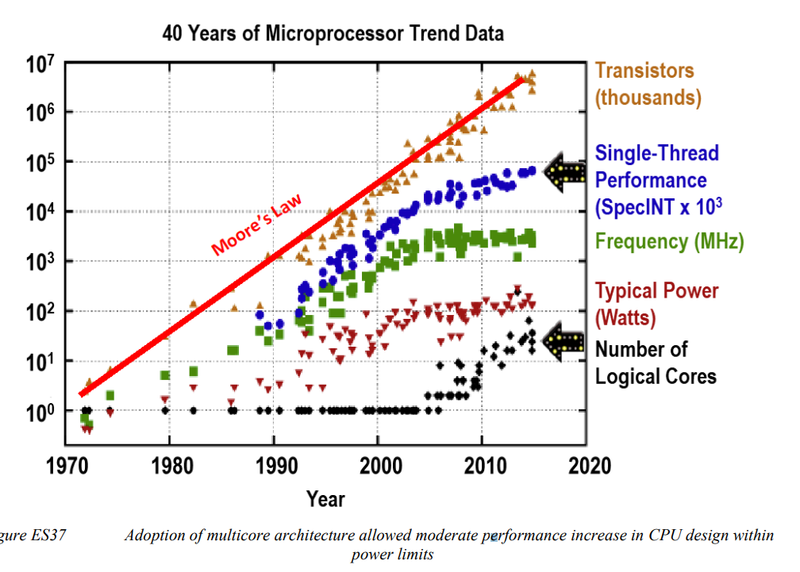

3. Moore’s Law Is Slowing Down

For decades, the number of transistors on a chip doubled roughly every two years, and performance climbed along with it. This pattern helped create smartphones, gaming systems, and lightning-fast laptops.

Now engineers struggle to make transistors smaller because their smallest features are already only tens of atoms across. Physics itself creates limits that money and creativity can’t easily overcome.

Future improvements will come from smarter designs rather than raw speed increases.
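
To see why the doubling pattern mattered so much, here is a small sketch of the arithmetic. The function name and the fixed two-year period are assumptions for illustration, not a model of any real chip.

```python
def projected_growth(years, doubling_period_years=2.0):
    """Growth factor after `years` if a quantity doubles every period."""
    return 2 ** (years / doubling_period_years)


# Doubling every two years compounds quickly.
print(projected_growth(10))  # 32.0
print(projected_growth(20))  # 1024.0
```

Twenty years of steady doubling is roughly a thousandfold increase, which is why even a modest slowdown has such a large effect over time.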

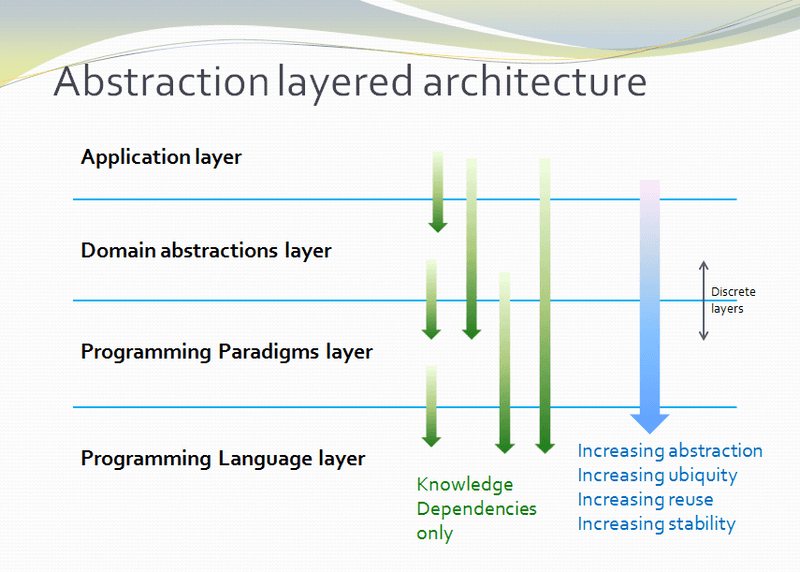

4. Every Abstraction Leaks

Software layers hide complex details to make programming easier, but they never hide everything perfectly. Underlying systems always show through in unexpected ways.

A simple photo app might suddenly crash because of memory problems deep in the operating system. Web browsers sometimes behave differently because of hardware quirks.

Understanding lower levels helps solve mysterious problems when abstractions break down.
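
A classic small leak is decimal arithmetic. The short Python sketch below shows the binary floating-point hardware format showing through a language that otherwise lets you write ordinary-looking decimals.

```python
import math

# Decimal literals look exact, but they are stored as binary fractions.
total = 0.1 + 0.2

print(total == 0.3)              # False
print(math.isclose(total, 0.3))  # True

# Comparing with a tolerance is the usual way to work around this leak.
```

Nothing here is a bug in Python. The abstraction of "a number" simply cannot hide how the hardware represents it.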

5. Garbage In, Garbage Out

Computers process whatever information you give them, whether it’s accurate or completely wrong. Bad data creates bad results, no matter how sophisticated the software.

A weather app with broken sensors will give terrible forecasts. Social media algorithms trained on biased information will make unfair recommendations.

Quality output always starts with quality input and careful data validation.
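
As a rough illustration of data validation, the sketch below filters temperature readings before they are used. The function name and the plausible range of -90 to 60 degrees Celsius are assumptions chosen for this example.

```python
def valid_readings(readings, low=-90.0, high=60.0):
    """Keep only numeric readings inside an assumed plausible range."""
    clean = []
    for value in readings:
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        if is_number and low <= value <= high:
            clean.append(float(value))
    return clean


# 999.0 is a broken-sensor value and None is a missing reading.
raw = [21.5, 22.0, 999.0, None, 20.5]
print(valid_readings(raw))  # [21.5, 22.0, 20.5]
```

Averaging the raw list would either crash on the missing value or report a wildly wrong temperature. Filtering first keeps the garbage out.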

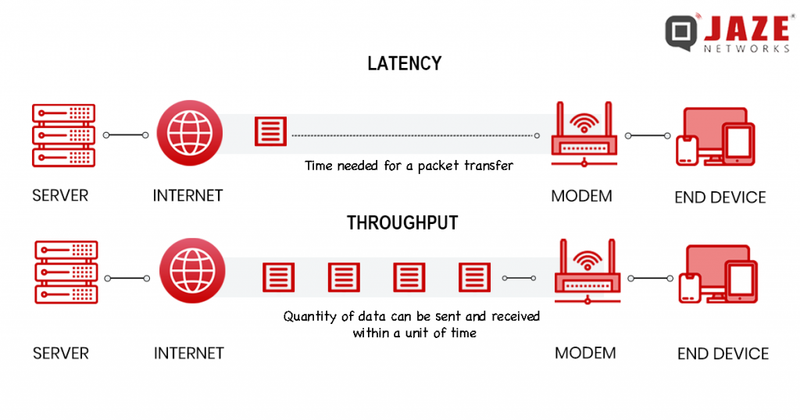

6. Latency Differs From Bandwidth

Bandwidth measures how much data flows through a connection, while latency measures how long each piece takes to arrive. Both matter for different reasons.

Streaming movies needs high bandwidth to avoid buffering. Online gaming requires low latency so controls respond instantly.

Think of a long fire hose: it moves a huge amount of water per second (bandwidth), but the first drop still needs time to travel the length of the hose (latency).
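
A simplified way to see the difference is to add the two costs together. The sketch below assumes a single one-way transfer and ignores protocol overhead, so treat the function and the example numbers as illustrative only.

```python
def transfer_time_seconds(size_bytes, bandwidth_bits_per_s, latency_s):
    """Simplified model: fixed delay plus time to push the bits through."""
    return latency_s + (size_bytes * 8) / bandwidth_bits_per_s


# A 100-byte game update on a fast link (1 Gbit/s) with 100 ms latency.
game_update = transfer_time_seconds(100, 1_000_000_000, 0.100)

# A 1 GB movie on a slower link (10 Mbit/s) with only 10 ms latency.
movie = transfer_time_seconds(1_000_000_000, 10_000_000, 0.010)

print(f"game update: {game_update:.4f} s")
print(f"movie:       {movie:.2f} s")
```

The game update takes about 0.1 seconds, almost all of it latency. The movie takes about 800 seconds, almost all of it bandwidth.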

7. Time Is Unreliable in Computing

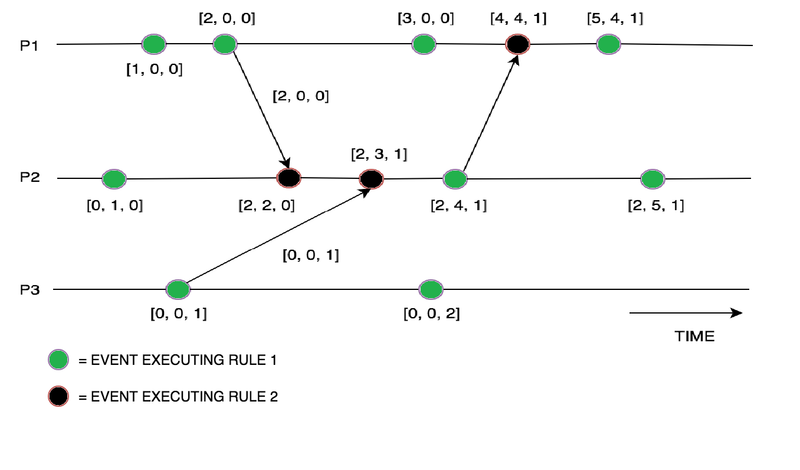

Coordinating time across multiple computers creates surprising challenges. Different machines have slightly different clocks that drift apart over time.

Network delays mean messages can arrive out of order, making it hard to know what happened first. Time zones and daylight saving changes add more confusion.

Distributed systems use clever tricks like logical timestamps to work around these timing problems.
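
One well-known kind of logical timestamp is the Lamport clock. The class below is a minimal sketch of the idea, with method names chosen for this example rather than taken from any particular library.

```python
class LamportClock:
    """A counter that orders events without trusting wall-clock time."""

    def __init__(self):
        self.time = 0

    def tick(self):
        """Record a local event."""
        self.time += 1
        return self.time

    def send(self):
        """Sending is an event; the returned stamp travels with the message."""
        return self.tick()

    def receive(self, message_time):
        """Jump past the sender's stamp so the receive is ordered after the send."""
        self.time = max(self.time, message_time) + 1
        return self.time


alice = LamportClock()
bob = LamportClock()

alice.tick()                   # a local event on Alice's machine
stamp = alice.send()           # Alice sends a message
bob_time = bob.receive(stamp)  # Bob receives it

print(stamp, bob_time)  # 2 3
```

Bob's clock ends up ahead of the stamp on the message, so the receive is always ordered after the send, no matter what either machine's real clock says.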

8. Speed of Light Limits Everything

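Information cannot travel faster than light, creating unavoidable delays in global communications. Even in a vacuum, light needs about 67 milliseconds to travel halfway around Earth. In fiber optic cables, where light moves at roughly two-thirds of that speed, the same trip takes about 100 milliseconds.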

This delay affects everything from video calls to financial trading systems. Geostationary satellite internet has even longer delays because signals travel roughly 36,000 kilometers up to orbit and back down again.

Physics sets hard limits that engineering cannot overcome.
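
The minimum delay is easy to estimate yourself. The sketch below assumes an equatorial circumference of about 40,075 km and a typical fiber refractive index of about 1.47. Real cable routes are longer and add equipment delays on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in a vacuum
EARTH_CIRCUMFERENCE_KM = 40_075    # approximate, at the equator
FIBER_REFRACTIVE_INDEX = 1.47      # assumed typical value for optical fiber


def one_way_delay_ms(distance_km, refractive_index=1.0):
    """Best-case travel time for light over a distance, in milliseconds."""
    speed_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    return distance_km / speed_km_s * 1000


halfway = EARTH_CIRCUMFERENCE_KM / 2
vacuum_ms = one_way_delay_ms(halfway)
fiber_ms = one_way_delay_ms(halfway, FIBER_REFRACTIVE_INDEX)

print(f"vacuum: {vacuum_ms:.1f} ms")
print(f"fiber:  {fiber_ms:.1f} ms")
```

Under these assumptions the result is roughly 67 ms in a vacuum and roughly 98 ms in fiber, and that is only one direction. A round trip doubles it.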

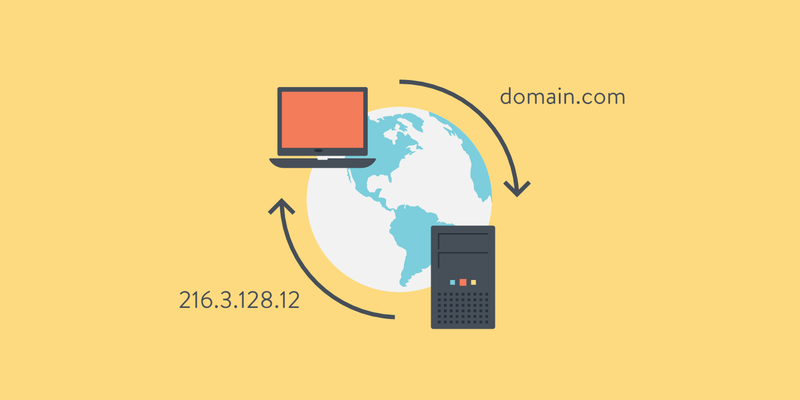

9. DNS Takes Time to Propagate

When you type a website address, computers must look up the actual server location using the Domain Name System. This lookup process isn’t instant.

Changes to DNS records can take hours or days to reach everyone, because servers around the world keep cached copies of the old record until its time-to-live (TTL) expires. This stale cached information sometimes causes websites to appear broken temporarily.

Patience helps when website changes don’t appear immediately for all users.
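
The delay comes from caching. The toy cache below is a sketch of the general idea, not how any real resolver is implemented. The class name is hypothetical, and the addresses come from ranges reserved for documentation.

```python
class TtlCache:
    """Toy lookup cache: answers are reused until they expire."""

    def __init__(self):
        self._entries = {}

    def put(self, name, address, ttl_seconds, now):
        self._entries[name] = (address, now + ttl_seconds)

    def get(self, name, now):
        entry = self._entries.get(name)
        if entry is None:
            return None
        address, expires_at = entry
        if now >= expires_at:
            del self._entries[name]  # expired: a fresh lookup is needed
            return None
        return address


cache = TtlCache()
cache.put("example.com", "192.0.2.10", ttl_seconds=3600, now=0)

# The site owner changes the record at time 60, but the cache doesn't know.
print(cache.get("example.com", now=1800))  # 192.0.2.10 (stale but still cached)
print(cache.get("example.com", now=3600))  # None (expired, time to ask again)
```

Until the cached entry expires, users behind that cache keep seeing the old address, which is why lowering the expiry time before a planned change is a common habit.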

10. True Randomness Doesn’t Exist in Computers

Computers follow precise mathematical rules, so software alone cannot produce truly random numbers. Instead, it creates pseudo-random sequences that appear random but are completely determined by a starting value called a seed.

Good random number generators use unpredictable inputs like mouse movements or atmospheric noise. Weak randomness can break encryption and make passwords guessable.

Critical security applications often require special hardware to generate better randomness.
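
Python's standard library makes the distinction easy to see. In this short sketch, two generators given the same seed produce the same "random" dice rolls, while the `secrets` module draws from the operating system's unpredictable source instead.

```python
import random
import secrets

# Two pseudo-random generators started from the same seed.
first = random.Random(42)
second = random.Random(42)

rolls_a = [first.randint(1, 6) for _ in range(5)]
rolls_b = [second.randint(1, 6) for _ in range(5)]

print(rolls_a == rolls_b)  # True: same seed, same sequence

# For passwords, tokens, and keys, use the OS-backed source instead.
token = secrets.token_hex(16)  # 16 random bytes as 32 hex characters
print(len(token))  # 32
```

Seeded generators are fine for games and simulations, where repeatability is even useful. They are the wrong tool for anything an attacker might want to guess.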

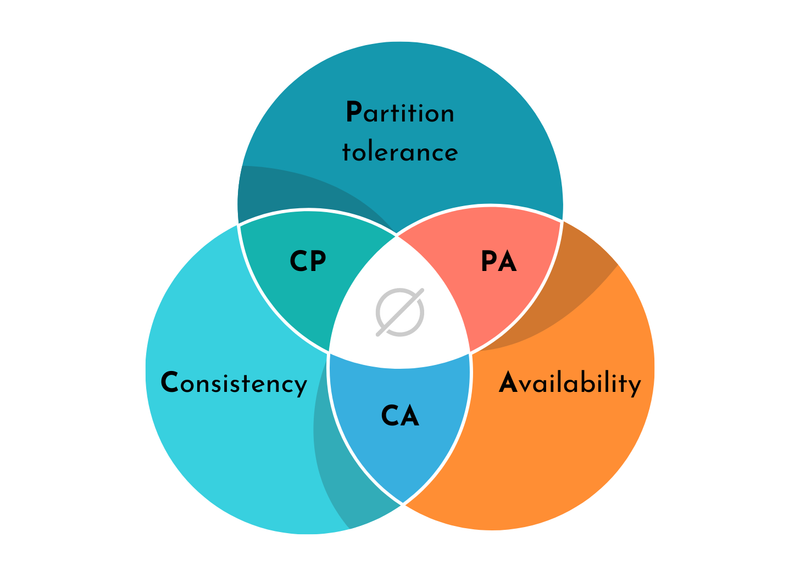

11. CAP Theorem Forces Difficult Choices

Distributed systems cannot guarantee consistency, availability, and partition tolerance simultaneously. When a network failure splits a system into parts that cannot talk to each other, designers must choose between staying consistent and staying available.

Banking systems prioritize consistency to prevent money from disappearing. Social media platforms choose availability so users can always post updates.

Understanding these trade-offs helps explain why different online services behave differently during outages.

12. All Software Contains Bugs

Every program has mistakes hiding somewhere in its code. Complex software might contain thousands of unknown bugs waiting to cause problems.

Even simple programs can behave unexpectedly when users do something the programmer never considered. Testing helps find many bugs, but finding every single one is practically impossible.

Good software design assumes bugs exist and includes ways to handle them gracefully.

13. Users Are Right Until They’re Wrong

Customer feedback provides valuable insights about real-world software usage. Users often discover problems and use cases that developers never imagined.

However, users don’t always understand technical limitations or security implications of their requests. Sometimes what users want would actually make their experience worse.

Balancing user desires with technical reality requires careful listening and clear communication.

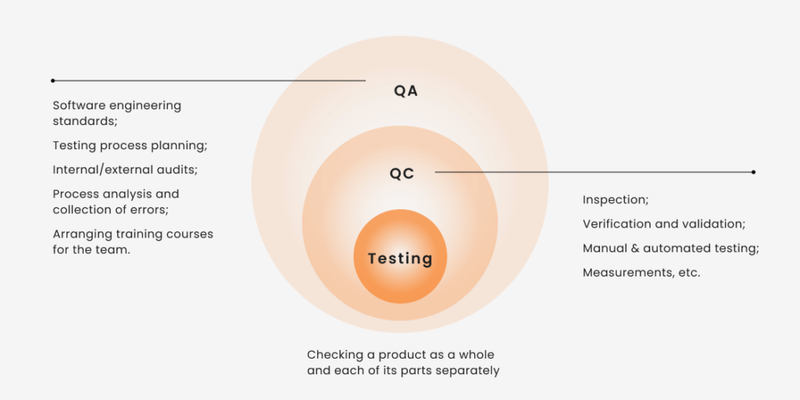

14. Untested Code Is Broken Code

Software that hasn’t been tested properly will fail when users need it most. Bugs hide in code paths that seem obvious but never get checked.

Automated tests catch problems before they reach customers. Manual testing reveals usability issues that automated tools miss.

Investing time in testing saves much more time fixing problems later. Quality assurance prevents embarrassing failures and frustrated users.
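
Here is a small sketch of what that looks like in practice. The `average` function is a made-up example. The point is that the test checks the empty-list path, which is exactly the kind of obvious case that tends to go unchecked.

```python
def average(values):
    """Mean of a non-empty list of numbers."""
    if not values:
        raise ValueError("average() needs at least one value")
    return sum(values) / len(values)


def test_average():
    # The normal path.
    assert average([2, 4, 6]) == 4

    # The path people forget: an empty list must fail clearly.
    try:
        average([])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for an empty list")


test_average()
print("all tests passed")
```

Without the empty-list check, the function would fail with a confusing division error the first time a user had no data to average.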

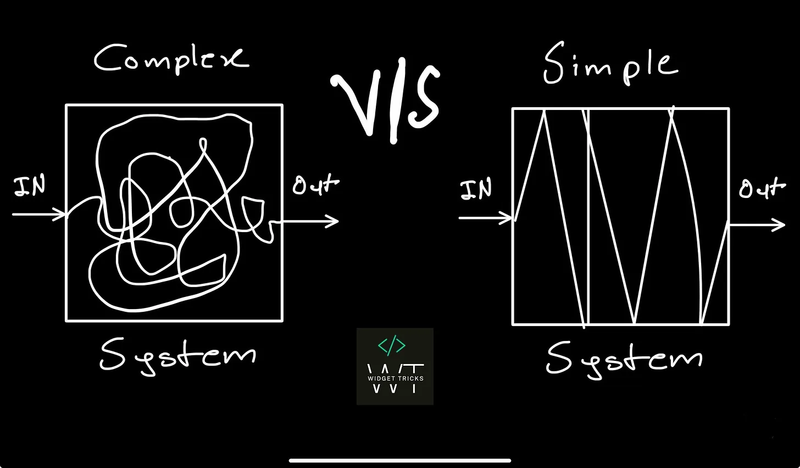

15. More Code Creates More Problems

Every line of code added to a program increases the chances of introducing new bugs. Large codebases become harder to understand and maintain over time.

Simple solutions often work better than complex ones because they have fewer failure points. Experienced programmers know when to add features and when to remove unnecessary complexity.

Sometimes the best code is the code you don’t write.

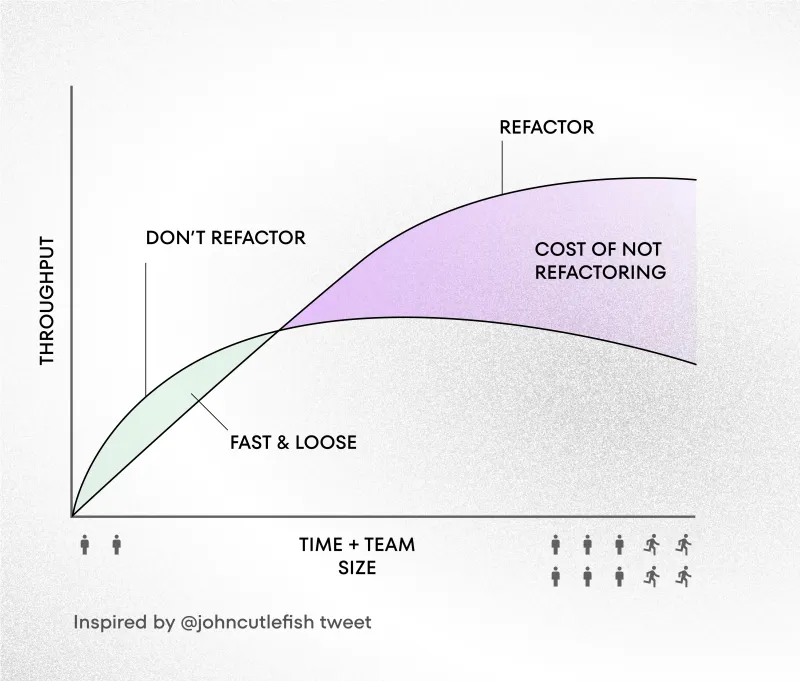

16. Technical Debt Always Comes Due

Quick fixes and shortcuts in code create technical debt that must be repaid later with interest. Rushed solutions often cause bigger problems down the road.

Like financial debt, technical debt accumulates over time and slows down future development. Teams that ignore technical debt eventually spend more time fighting old problems than building new features.

Regular refactoring helps keep debt manageable.

17. The Cloud Is Someone Else’s Computer

Cloud computing simply means running your programs on computers owned by other companies. Your data lives in massive data centers operated by cloud providers.

This arrangement offers convenience and cost savings but creates new dependencies. When cloud services go down, your applications stop working too.

Understanding cloud limitations helps make better decisions about what to put in the cloud.

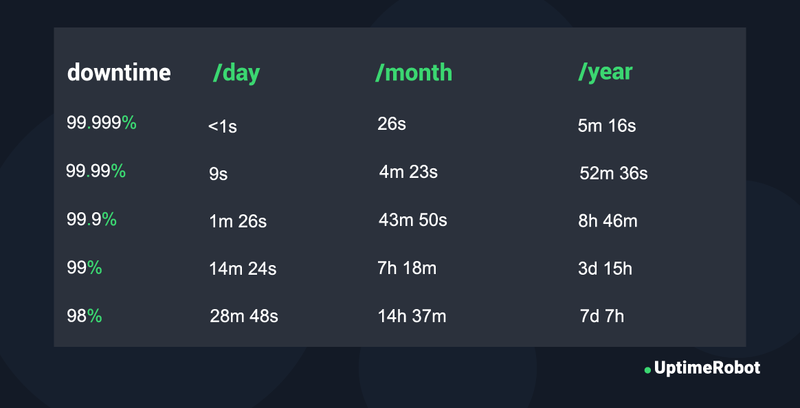

18. 99.999% Uptime Is Nearly Impossible

Five nines (99.999%) uptime means a system can be down for only about five minutes per year. This extremely high standard requires redundant hardware, rigorously tested software, and disciplined operations.

Power outages, network failures, and human errors can destroy months of perfect uptime in seconds. Even the most reliable systems experience unexpected problems.

Most applications work fine with lower availability targets that cost much less to achieve.
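
The downtime budget for each availability target is simple arithmetic. The sketch below assumes a 365-day year, and the function name is made up for this example.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def allowed_downtime_minutes(availability_percent):
    """Yearly downtime budget for a given availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


for target in (99.0, 99.9, 99.99, 99.999):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% -> {minutes:.1f} minutes per year")
```

Each extra nine cuts the budget by a factor of ten: from about 3.7 days at 99%, to under 9 hours at 99.9%, down to roughly 5.3 minutes at five nines.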

19. Compression Always Involves Trade-offs

Making files smaller always costs something, usually quality or processing time. Lossless compression preserves every bit of the original but shrinks files less.

Lossy compression creates much smaller files by throwing away information you hopefully won’t miss. Video streaming services constantly balance file size against visual quality.

Choosing the right compression depends on your specific needs and constraints.
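
Python's built-in `zlib` module shows the lossless side of the trade-off. In this small sketch the sample text is deliberately repetitive so it compresses well. Real files vary a lot.

```python
import zlib

# Repetitive sample data, chosen because it compresses well.
original = b"the quick brown fox jumps over the lazy dog. " * 500

fast = zlib.compress(original, 1)   # level 1: less effort
small = zlib.compress(original, 9)  # level 9: more effort

# Lossless means the original comes back exactly.
restored = zlib.decompress(small)
print(restored == original)  # True

print(len(original), len(fast), len(small))
```

Higher levels spend more processing time looking for savings. Nothing is thrown away, which is why lossless methods cannot match the size reductions of lossy formats on photos and video.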

20. Software Runs Slower on Slower Hardware

This might seem obvious, but many people expect software to run the same speed everywhere. Older computers with less memory and slower processors struggle with modern applications.

Mobile apps must work on both high-end flagship phones and budget devices with limited resources. Web browsers vary dramatically in performance across different devices.

Good software adapts to available hardware rather than assuming unlimited resources.

21. Most Data Never Gets Accessed Again

Studies show that most files are accessed frequently when first created, then rarely or never touched again. Old emails, photos, and documents pile up in digital storage.

This pattern influences how storage systems work, keeping frequently accessed data readily available while moving old data to cheaper, slower storage.

Understanding access patterns helps design more efficient and cost-effective storage solutions.

22. No Backup Means No Mercy

Hard drives fail, phones get dropped, and laptops get stolen. Without backups, years of photos, documents, and memories can disappear forever in an instant.

Automatic cloud backups make protection easier than ever before. Multiple backup copies in different locations provide even better protection against disasters.

Learning this lesson the hard way means losing irreplaceable data that can never be recovered.

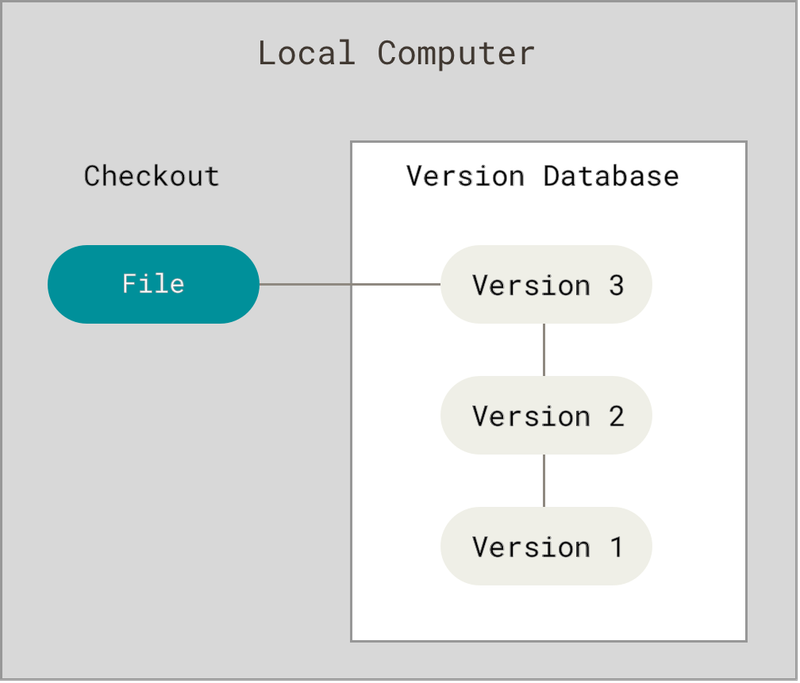

23. Version Control Is Essential

Tracking changes to code and documents prevents countless headaches when things go wrong. Version control systems remember every modification and let you undo mistakes easily.

Collaboration becomes much easier when everyone can see what changed and who changed it. Branching allows multiple people to work on different features simultaneously.

Professional developers consider version control as fundamental as having a keyboard and monitor.

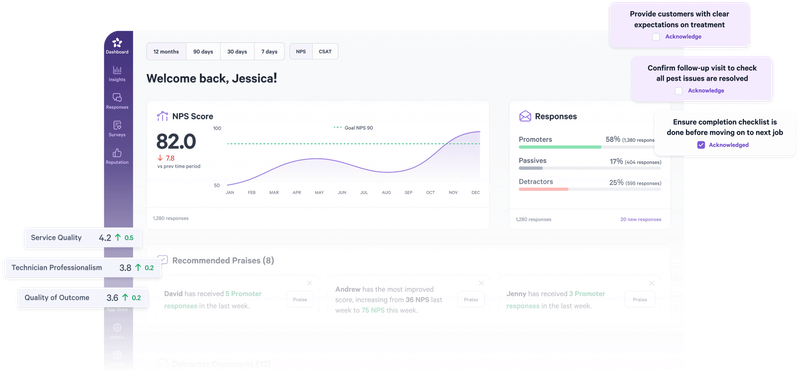

24. Measurement Enables Optimization

Guessing about performance problems usually leads to wasted effort optimizing the wrong things. Measuring actual behavior reveals where bottlenecks really occur.

Profiling tools show which parts of programs consume the most time and memory. User analytics reveal how people actually use features versus how designers intended.

Data-driven optimization produces much better results than intuition alone.
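
Measuring can be as simple as timing two candidates. The sketch below uses Python's `timeit` module to compare looking up a value in a list and in a set. The sizes and repeat counts are arbitrary choices for illustration.

```python
import timeit

items_list = list(range(10_000))
items_set = set(items_list)

# Time 200 lookups of a value near the end of each collection.
list_time = timeit.timeit(lambda: 9_999 in items_list, number=200)
set_time = timeit.timeit(lambda: 9_999 in items_set, number=200)

print(f"list lookup: {list_time:.6f} s")
print(f"set lookup:  {set_time:.6f} s")
```

The exact numbers depend on your machine, but the set is usually far faster because it doesn't scan every element. The habit matters more than this particular result: measure first, then optimize.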

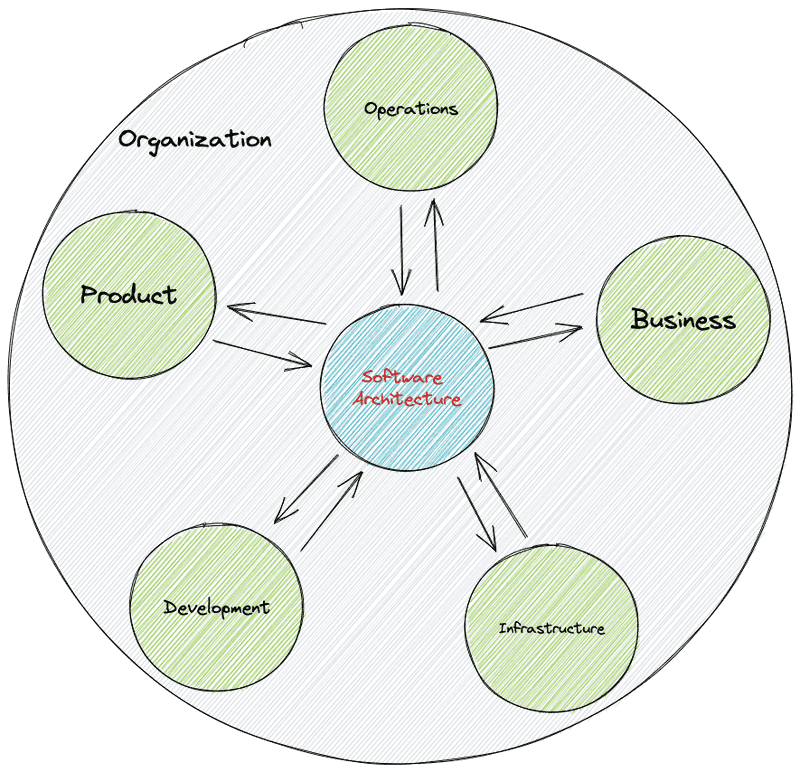

25. Perfect Software Architecture Doesn’t Exist

Every architectural decision involves trade-offs between competing goals like performance, maintainability, and cost. What works perfectly for one project might be terrible for another.

Requirements change over time, making yesterday’s perfect design inadequate for today’s needs. Good architecture adapts to change rather than trying to predict every future requirement.

Experience teaches when to over-engineer and when to keep things simple.